Mastering Marketing Discrepancies: A Guide to Monitoring Paid Media Metrics

Discrepancies in attribution data are inevitable. This guide will walk you through the steps to effectively measure and monitor reporting discrepancies, using tools like Rows and Google Analytics.

Before we get started: I’m excited to share that this article is sponsored by Rows, a modern spreadsheet with out-of-box connectors to marketing sources. In this piece, we’ll cover how to monitor discrepancies with both Rows and other tools.

What are marketing discrepancies, and why monitoring is the solution

You cannot escape marketing discrepancies

Two things are commonplace in paid media measurement:

A paid media campaign’s results are measured across multiple sources. Conversions are tracked on the ad platform and—also—on the other tools used by your marketing and product team: Google Analytics, Snowplow, Mixpanel, Amplitude, etc.

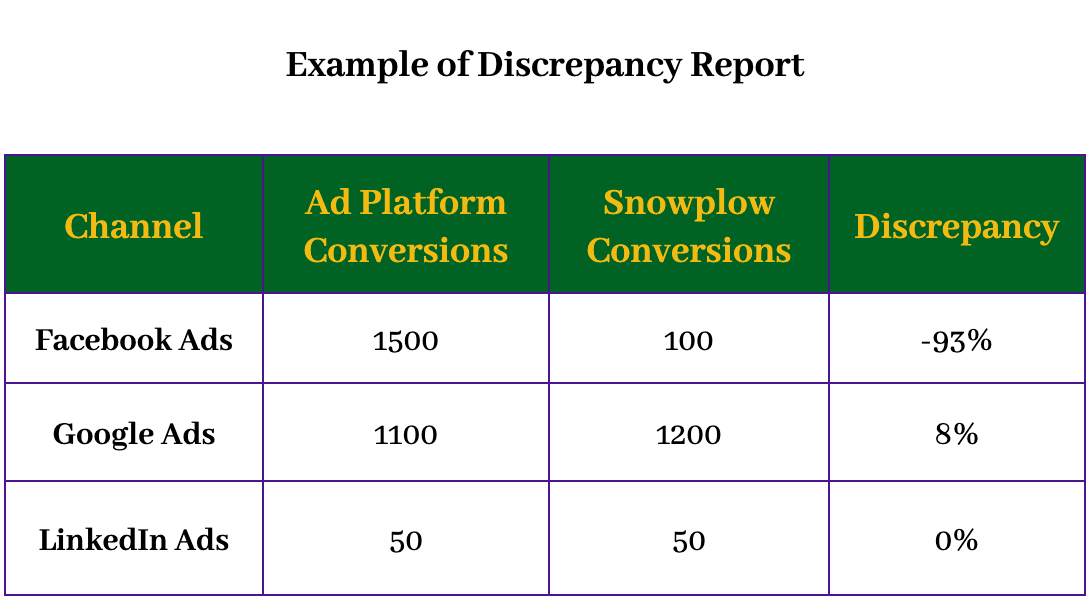

The number of conversions, attributed to a certain campaign, varies depending on the source. This variation is known as a discrepancy.

The combination of marketing strategy with marketing sources can lead to different degrees of discrepancy. In certain cases, you can expect very high discrepancies (e.g. Meta CAPI + Snowplow) or relatively low (e.g. Google Ads + Google Analytics).

That’s because each marketing source attributes conversions differently: we covered why that happens in this previous article.

As much as finance and data teams would like, unfortunately, discrepancies cannot be “extinguished”. So, the best you can do is measure, monitor and learn to live with them.

Measuring discrepancies can shine a light on performance and tracking errors

When we refer to “measuring discrepancies”, we refer to comparing attributed conversions from a certain channel or campaign across multiple sources. For example, measuring how many attributed conversions are reported for paid search campaigns on both Google Ads and Snowplow.

The size of the discrepancy can tell you the following about the performance of your paid media campaign:

Tracking errors, like underfiring or overfiring of events

Lack of measurement due to consent flows

Percentage of impressions and clicks served on mobile devices

Bot traffic

Impact of time-to-conversion in lookback windows

Audience overlap with other strategies

Monitoring discrepancies functions as an alert. If a discrepancy between two sources (or campaigns) increases beyond your benchmark, you should dive in and research if one of the above has changed.

Has there been a surge in bot traffic? Is something broken with the tracking? Is your campaign reaching users who are converting via other sources? Those are all possible causes for an increase in discrepancies.

Has there been a surge in bot traffic? Is something broken with the tracking? Is your campaign reaching users who are converting via other sources? Those are all possible causes for an increase in discrepancies.

Discrepancy monitoring is dependent on UTM naming conventions

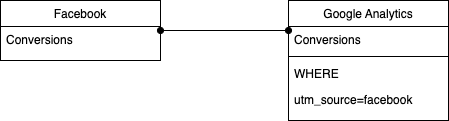

Measuring discrepancies involves joining two disparate data sources. Traditionally, most data sources (from ad platforms to website analytics) rely on URL queries for measuring conversions. Although there’s been a move to server-side, click-based attribution is still the bread and butter of how tools from Google Analytics to Snowplow measure conversions.

That means that if you want to measure Facebook results on Google Analytics, you need to add a query in the URL that allows you to isolate Facebook traffic. Commonly, something like utm_source=facebook.

However, discrepancies don’t exist solely at a channel level. Discrepancies could be higher for a certain campaign (e.g. which is running in a specific market with tougher consent rules), ad group (e.g. which is targeting an audience that has a high overlap with other strategies) or ad (e.g. which is being mostly clicked on mobile devices).

To expand the granularity of your discrepancy reporting, you must include unique identifiers for the different levels in your URL. This is what I recommend to clients:

Rely on IDs. These are consistent and unique. I also enjoy that they don’t pass too much information to the user. As a marketer, UTM fields like US_VisitedOnce_HighValue_Brand_BroadMatch give me the irk. But that could be just me.

Pass values dynamically. Both Google and Meta support URL macros that automatically append Ad IDs and the likes to your landing page URLs. This diminishes the human error probability.

Here’s an example of how I’ve measured discrepancies between Ad Platform, Ad Server and Website Analytics for a client:

Different set-up options for building discrepancy reports

There are three decisions to make before getting started on measuring and monitoring discrepancies: 1) Where will the data be stored? 2) How will you extract the data from each source? 3) Where will this data be accessible / visualised?

I will cover three set-up options for building discrepancy reports.

Data Warehouse + ETL + BI

This is the most technically complex set-up option, and will likely have to be done by your marketing analytics or data platform team. However, you probably won’t need to pay for any tools because your data team likely already owns all the contracts.

In this case, the data is:

Sourced from your ad platforms by using an ETL, like Fivetran or Airbyte.

Stored in your data warehouse. In this scenario, I assume you’re already storing your product analytics data (e.g. Google Analytics, Amplitude, etc) in your data warehouse. The data is then transformed and joined also in your data warehouse.

Exposed in your BI tool of choice, like Looker, Tableau, Hex, Lightdash, etc.

Offline Spreadsheet + Manual export

This one is probably the one most people will start with. But, as it’s manual work, it can lead to human errors and difficulty sharing insights with other stakeholders.

In this case, the data is:

Sourced manually by exporting reports from each tool from the UI.

Stored and visualised directly on your Offline Spreadsheet, like Excel.

Since this one uses the UI for reporting, there are also no incurred additional costs. But this option is difficult to scale.

Online Spreadsheet + ETL

This one is more scalable than the Offline Spreadsheets and can be executed by a marketer end-to-end. Both are great news.

In this case, the data is:

Sourced from your ad platforms and product analytics by using a Spreadsheet-friendly ETL, like Supermetrics or Funnel.io.

Stored and visualised directly on your Online Spreadsheet, like Google Sheets. Airtable, for example, also has additional ETL integrations, like Airflow.

This can be a great choice for an agency, but Spreadsheet-friendly ETLs can be pricey. If you require Linkedin Ads data, Supermetrics starts at $159 per month. However, it’s worth considering if you need to measure discrepancies for less popular ad platforms, like Shopify Ads.

Modern Spreadsheet

Modern Spreadsheets come with out-of-box integrations so you can mimic the set-up above but with one centralised tool.

In this case, the data is:

Sourced, stored and visualised directly on your Modern Spreadsheet, like Rows.

Rows has out-of-box ETL integrations with some of the most popular Ad Platforms: Linkedin, Google, TikTok, Meta. It’s easy to get started (as we’ll cover with templates in the next section), but, at the time of the writing, certain ad platforms (like Apple Search Ads, Campaign Manager, Amazon Ads, etc) were not yet supported. If you require one of these, you must select one of the alternative set-up options.

Summarising Options

Choose which set-up works best for your discrepancy reporting based on: the skillset available (data team resources or not), existing contracts, price, scalability and data sources required.

Rows templates for measuring discrepancies

As part of the sponsorship, I’ve created templates with Rows that automate the most common types of discrepancy measurement. These templates are for measuring channel-level discrepancy, but you can use the same logic to build further granularity into the report if your URL naming convention allows it:

Access the templates below:

Get started by:

Creating an account on Rows, if you don’t already have one.

Follow the link to the template you want to install (e.g. Google Ads). Click Use Template.

Pick the destination (folder) for the template.

Once you land on the template, you’ll be asked to connect your data sources. In this case, GA4 and Google Ads.

Once that is done, in the Input Data Page:

Add your naming conventions for the UTM parameters. This filters only Google Ads conversions within GA4.

Add the name of your conversion event in GA4. This filters only the same conversions you’re measuring on Google Ads.

Pick a date range for the analysis.

The Report page will give you a daily analysis of the conversion discrepancies between both channels. I’d recommend to set it to automatically update the data at a weekly cadence.

Moving forward with discrepancy reporting and monitoring

Review UTM naming conventions to increase reporting granularity

The first step for measuring discrepancies is to review your UTM naming conventions and your campaigns’ taxonomy. This article on naming conventions can help you ask the right questions about how to structure your campaigns and what values to include where.

Use alerting to be notified of discrepancy changes

Once you’ve set up your discrepancy reporting and calculated your discrepancy benchmarks, alerting is very helpful. It avoids unnecessary investigation until the discrepancy crosses a specific threshold.

You can use Zapier (via Rows or Google Sheet) to automatically create a ticket on Linear or Asana for your team to check tracking issues. I’ve previously set up a Slack alert for a big programmatic advertiser that enabled them to identify wrapping errors and possible ad fraud:

Learn about the intersection of marketing and data

If you’ve enjoyed reading this article, don’t forget to subscribe to 021 Newsletter (for free!). I write about all-things-marketing-data like attribution, growth models, data activation and more.