When marketing tracking breaks: my process for troubleshooting conversion events

I cover how to identify where tracking failures occur, tools that can help you with this, and how to build systems that make future troubleshooting smoother.

First of all, I wanted to thank Yuval Inselberg (Infera Labs, ex-Meta), Milan Rudez (Smarly.io), Ofer Milller (TestGorilla), Alice Bedward (Insify) and Brijesh Bharadwaj (Segwise) for their feedback on this article!

The Not So Hidden Cost of Broken Tracking

Ever had that sinking feeling when your CMO asks why Facebook is reporting 5 conversions while your CRM shows 75? Or why your dashboards suddenly show conversion rates plummeting overnight? Welcome to the world of broken marketing tracking, where decisions stall and ad spend efficiency suffers.

In this guide, I'll walk you through my process for diagnosing and fixing marketing tracking issues. By the end, you'll understand:

How to systematically identify where tracking failures occur

Which debugging tools can help for different ad platforms

How to build systems that make future troubleshooting easier

Ways to establish trust in your data across teams

This isn't theoretical. These approaches have helped clients managing $1M+ media budgets across fintech, e-commerce, B2B SaaS, and other industries where data reliability directly impacts business outcomes.

The Algorithm Advantage You're Missing Out On

When campaigns perform well, tracking systems get ignored. But watch how quickly "let's check the tracking" becomes the rallying cry when numbers disappoint. This pattern repeats across almost every marketing team I've worked with.

In today's ad ecosystem, broken tracking isn't just annoying: it's inefficient. Modern ad platforms have moved beyond manual targeting to algorithm-driven optimization that relies on conversion signals to function. When tracking breaks, you're essentially forcing platforms back into manual mode, abandoning the sophisticated AI and machine learning technologies that make them effective.


Think about it: Meta's algorithm can process thousands of audience signals and bid adjustments per second, but only if it knows what a conversion looks like. Google's Smart Bidding can optimize for your specific business outcomes, but it needs clean conversion data to learn from. Without reliable tracking, you're stuck with manual audience targeting and bid management: an inefficient approach that wastes both time and money.

Or worse, you end up spending money in the wrong places and don't find out until your bottom line starts reflecting it.

For teams managing serious media budgets, this isn't a technical footnote. It's the difference between growth and waste. I've seen companies improve incremental CPAs not by changing their ads or landing pages, but simply by fixing broken tracking systems that were feeding poor signals to ad platforms. The algorithms were there, ready to optimize, but they needed better data to work with.

Five Steps to Troubleshoot What's Broken Now

When tracking breaks, you need a systematic approach to identify and fix the problem. Here's my process:

Step 1: Document Your Event Architecture

This sounds ridiculously obvious, but you'd be shocked how often teams can't answer basic questions like:

What events are we measuring for each ad platform?

When exactly do these events fire?

Where do they fire from? (e.g. cGTM, Meta CAPI, GA4)

What variables do they pass?

Create clear documentation that answers these questions and map out the data flow of the events. A simple flowchart will do. Without this basic alignment, troubleshooting becomes nearly impossible.
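If a flowchart is hard to keep in sync, the same documentation can also live as a small, version-controlled registry next to your tracking code. The sketch below is one way to do that in Python. The `TrackedEvent` fields mirror the four questions above, but the class, the helper functions and the sample rows are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TrackedEvent:
    """One row of a hypothetical event-architecture document."""
    name: str                 # event name as the platform receives it
    platform: str             # ad platform or tool receiving the event
    trigger: str              # when exactly the event fires
    source: str               # where it fires from, e.g. "cGTM", "Meta CAPI", "GA4"
    variables: tuple[str, ...] = field(default_factory=tuple)  # parameters passed


# Illustrative entries only; replace them with your own setup.
EVENT_REGISTRY = [
    TrackedEvent("purchase", "GA4", "order confirmation page load", "cGTM",
                 ("transaction_id", "value", "currency")),
    TrackedEvent("Purchase", "Meta", "order confirmation page load", "cGTM",
                 ("event_id", "value", "currency")),
    TrackedEvent("Purchase", "Meta", "order marked as paid in backend", "Meta CAPI",
                 ("event_id", "value", "currency")),
]


def events_for(platform):
    """Answer 'what events are we measuring for this platform?'"""
    return [e for e in EVENT_REGISTRY if e.platform == platform]


def missing_variable(required):
    """List events that do not pass a variable you expect to see."""
    return [e for e in EVENT_REGISTRY if required not in e.variables]


if __name__ == "__main__":
    for event in events_for("Meta"):
        print(f"{event.name} <- {event.source}: {event.trigger}")
```

Listing the Meta rows side by side makes it visible that `Purchase` arrives from both the browser (cGTM) and the server (Meta CAPI). That setup only works if both copies carry the same `event_id`, which Meta uses together with the event name to deduplicate browser and server events.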

Step 2: Understand What Each Event Actually Measures in the Ad Platform

"We had 157 conversions" means absolutely nothing without context.

Attributed conversions are different from all conversions.

Some platforms, like Google Ads, only show conversions they take credit for. Meta Events Manager, on the other hand, shows all events fired, regardless of whether they were attributed to Meta. This alone can lead to confusion.

It’s essential to check what’s configured in your ad platform. What is your lookback window? Are you counting post-click conversions only, or post-view as well? If you’re using catalog products, are all products verified?
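To see why the lookback window matters, here is a deliberately simplified model: a conversion counts as attributed only if it happens within N days after a click. The journeys are made-up data and `attributed_count` is a hypothetical helper. Real platforms layer view-through windows, attribution models and deduplication on top of this, so treat it as an illustration, not as how any platform computes its numbers.

```python
from datetime import datetime, timedelta

# Made-up (click_time, conversion_time) pairs for one campaign.
journeys = [
    (datetime(2024, 5, 1, 10), datetime(2024, 5, 1, 12)),   # 2 hours after the click
    (datetime(2024, 5, 1, 10), datetime(2024, 5, 4, 9)),    # almost 3 days after
    (datetime(2024, 5, 1, 10), datetime(2024, 5, 12, 9)),   # almost 11 days after
]


def attributed_count(pairs, window_days):
    """Count conversions that fall inside a post-click lookback window."""
    window = timedelta(days=window_days)
    return sum(1 for click, conversion in pairs
               if timedelta(0) <= conversion - click <= window)


if __name__ == "__main__":
    for days in (1, 7, 28):
        print(f"{days}-day click window: {attributed_count(journeys, days)} conversions")
```

The same three conversions show up as 1, 2 or 3 attributed conversions depending only on the window, without any change to the tracking itself.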

Together, Steps 1 and 2 are critical to closing the gap between marketing and engineering/data teams. I’ve seen engineering teams insist that “tracking was working” because they had completed Step 1, while ignoring how the ad platform’s configuration affects the way events are measured.

Step 3: Compare Against Your Source of Truth

You need a reality check: a system that captures what actually happened, not what platforms claim happened.

For purchases, this is your order database. For leads, it's your CRM. Whatever it is, identify this source and use it as your benchmark.

Just don't expect perfect matches. That's not how this works: discrepancies will always exist. I mostly use this comparison to confirm that events are not double-firing or being duplicated.
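A minimal sketch of that duplicate check in Python, assuming you can export transaction IDs from your order database (or CRM) and from the tool you are auditing (for example GA4's BigQuery export). The `reconcile` function and the sample IDs are hypothetical.

```python
from collections import Counter


def reconcile(source_ids, platform_ids):
    """Compare IDs from your source of truth with the IDs a platform or tool received."""
    received = Counter(platform_ids)
    truth = set(source_ids)
    return {
        # Same ID reported more than once: double firing or missing deduplication.
        "duplicated": sorted(i for i, n in received.items() if n > 1),
        # In the order database / CRM but never reported to the platform.
        "missing": sorted(truth - set(received)),
        # Reported to the platform but unknown to the source of truth
        # (test orders, malformed IDs).
        "unknown": sorted(set(received) - truth),
    }


if __name__ == "__main__":
    orders = ["T1", "T2", "T3", "T4"]      # e.g. exported from the order database
    platform = ["T1", "T2", "T2", "T5"]    # e.g. transaction IDs the platform received
    print(reconcile(orders, platform))
    # {'duplicated': ['T2'], 'missing': ['T3', 'T4'], 'unknown': ['T5']}
```

Some "missing" IDs are expected (consent, ad blockers), which is why the duplicated list is usually the more actionable one.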

Step 4: Verify Events Are Actually Firing When They Should

Before you can fix attribution, you need to confirm your events are firing when they should. Here are different ways to test them:

GA4 Debug View: Navigate to Admin → Debug View in GA4. Enable debug mode (for example, by browsing your site through GTM Preview mode), then perform the conversion action on your site. You should see the event appear in real time.

Meta Test Events: Go to Events Manager → Test Events. Watch for the event to appear in the testing interface.

Chrome DevTools Network Tab: Open Developer Tools → Network tab. Filter for your tracking domain (gtm, facebook, etc.). Perform the conversion and look for the HTTP requests being sent.

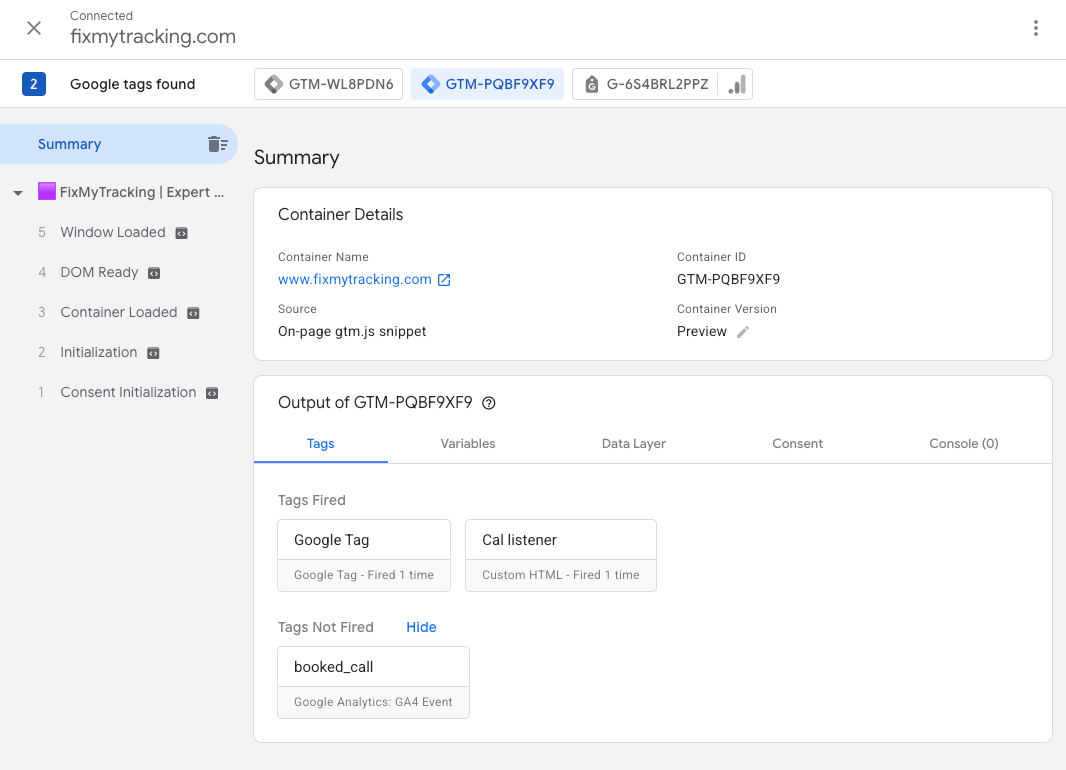

GTM Preview Mode: Click Preview in GTM, then navigate to your site. The preview pane shows exactly which tags fire and when.

I wrote here about how to make these steps easier with AI.
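When the Network tab fills up with requests, it can help to paste their URLs into a small script and count which events actually went out. The sketch below assumes GA4 requests carry the event name in the `en` query parameter and Meta Pixel requests carry it in `ev`. Verify this against the requests in your own browser, and note that GA4 can batch several events into one request body, which this URL-only sketch does not read. The function names are hypothetical.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Assumed query parameter holding the event name, keyed by the vendor's domain.
EVENT_PARAM_BY_DOMAIN = {
    "google-analytics.com": "en",  # GA4 collect requests
    "facebook.com": "ev",          # Meta Pixel requests
}


def summarize_request(url):
    """Return (domain, event_name) for a tracking request URL, or None if untracked."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    query = parse_qs(parts.query)
    for domain, param in EVENT_PARAM_BY_DOMAIN.items():
        if host == domain or host.endswith("." + domain):
            names = query.get(param)
            return domain, (names[0] if names else None)
    return None


def count_events(urls):
    """Count events per vendor; >1 for a single conversion hints at double firing."""
    return Counter(s for s in map(summarize_request, urls) if s is not None)


if __name__ == "__main__":
    captured = [
        "https://region1.google-analytics.com/g/collect?v=2&tid=G-TEST&en=purchase",
        "https://www.facebook.com/tr/?id=123&ev=Purchase",
        "https://www.facebook.com/tr/?id=123&ev=Purchase",
    ]
    print(count_events(captured))
```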

Step 5: Check Attribution

An event can fire successfully but still fail to attribute properly. It’s important to make this distinction, especially when developers are saying “everything is firing as it should”.

Common reasons for “broken attribution” are:

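UTM Parameter Issues: Your events might fire, but if UTM parameters are missing or named incorrectly, tools that rely on them (such as GA4 or your CRM) can't attribute conversions to campaigns. Check that utm_source, utm_medium, and utm_campaign are properly formatted and consistent, and that redirects don't strip them (or click IDs like gclid and fbclid) from the landing URL.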

Consent Management: Privacy settings can prevent events from being attributed even when they fire. This is especially problematic in Europe, where consent rates can be below 50%. I’ve seen this be a common cause of MMP hiccups.
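A quick way to catch the UTM side of this is to validate landing-page URLs before campaigns go live. This sketch checks that the three parameters mentioned above are present, non-empty, not repeated, and lowercase, and flags near-miss spellings. The lowercase rule is an assumption about your naming convention (GA4 reports "Meta" and "meta" as different values), and `utm_problems` is a hypothetical helper. It does not check click IDs such as gclid or fbclid.

```python
from urllib.parse import parse_qs, urlsplit

REQUIRED_UTMS = ("utm_source", "utm_medium", "utm_campaign")


def utm_problems(landing_url):
    """List UTM issues on a landing-page URL (missing, empty, repeated, not lowercase)."""
    query = parse_qs(urlsplit(landing_url).query, keep_blank_values=True)
    problems = []
    for key in REQUIRED_UTMS:
        values = query.get(key)
        if not values:
            problems.append(f"{key} missing")
        elif len(values) > 1:
            problems.append(f"{key} appears {len(values)} times")
        elif not values[0]:
            problems.append(f"{key} empty")
        elif values[0] != values[0].lower():
            problems.append(f"{key} not lowercase: {values[0]!r}")
    # Catch near-miss parameter names such as "UTM_Source" or "utm-source".
    for key in query:
        normalized = key.lower().replace("-", "_")
        if normalized in REQUIRED_UTMS and key != normalized:
            problems.append(f"misspelled parameter {key!r}")
    return problems


if __name__ == "__main__":
    print(utm_problems("https://example.com/?UTM_Source=Meta&utm_medium=&utm_campaign=Spring"))
```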

Four Activities To Improve the Speed and Confidence of Troubleshooting

Once you've fixed immediate issues, invest in systems that make future troubleshooting easier:

Activity 1: Store Raw Data of Your Source of Truth

Sometimes an event breaks in specific user journeys that basic debugging won't reveal.

In these cases, raw data becomes your savior. Set up the GA4 BigQuery export or store your MMP data in your warehouse. Server-side tracking can offer a major advantage here, because you control the server that sends each event and can log it before it goes out. If you rely only on client-side tracking and that’s your source of truth, make sure to log these events somewhere you can query them.
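If you use the GA4 BigQuery export, one recurring hurdle is that event parameters are stored as a repeated `event_params` record of `key`/`value` pairs, with the value split across typed fields (`string_value`, `int_value`, `float_value`, `double_value`). The helpers below assume rows have already been loaded as plain Python dicts with that shape. The function names and sample data are hypothetical.

```python
def get_param(event, key):
    """Return one event parameter's value from a GA4 BigQuery export row (as a dict).

    event_params is a list of {"key": ..., "value": {...}}; normally only one of the
    typed value fields is populated and the others are None.
    """
    for param in event.get("event_params", []):
        if param.get("key") == key:
            value = param.get("value") or {}
            for field_name in ("string_value", "int_value", "double_value", "float_value"):
                if value.get(field_name) is not None:
                    return value[field_name]
    return None


def find_events(rows, **criteria):
    """Filter exported events by parameter values, e.g. transaction_id="T-1001"."""
    return [row for row in rows
            if all(get_param(row, k) == v for k, v in criteria.items())]


if __name__ == "__main__":
    rows = [
        {"event_name": "purchase", "event_params": [
            {"key": "transaction_id", "value": {"string_value": "T-1001"}},
            {"key": "value", "value": {"double_value": 49.0}},
        ]},
    ]
    print(find_events(rows, transaction_id="T-1001"))
```

Filtering raw events by a single ID like this is often the fastest way to reconstruct what happened in the one user journey that basic debugging didn't reproduce.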

From a business perspective, investing in proper data storage isn't just a technical nice-to-have. It's insurance against future diagnostic challenges. In my experience, when a client implements comprehensive raw data collection, troubleshooting time drops significantly.

Try as much as you can to centralize your tracking in one place. If you only have to manage a single GTM container or one Segment account, or you send everything directly from BigQuery, the risk of discrepancies (and double counting) decreases.

Activity 2: Pass Identifiers for Isolation

Debugging without proper identifiers is like searching for a specific grain of sand on the beach.

Pass unique variables like transaction_id, opportunity_id, and user_id across your systems. These become crucial for pinpointing exactly where and when failures occur.

The organizational impact here is significant: when marketing, product, and data teams share consistent identifiers, cross-functional diagnostics become more efficient.
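Here is a minimal sketch of how shared identifiers pinpoint failures, assuming you can pull the set of `transaction_id`s that each system recorded. The system names, `trace` and `make_event_id` are hypothetical. The `event_id` helper reflects one practical use of identifiers: Meta deduplicates Pixel and Conversions API events that share the same event name and event ID (the Pixel's `eventID` option and the Conversions API's `event_id` field), so deriving the ID from the `transaction_id` lets browser and server produce identical values without coordinating.

```python
# Hypothetical systems an order should reach; the first one is the source of truth.
SYSTEMS = ("order_db", "ga4", "meta")


def make_event_id(event_name, transaction_id):
    """Derive the same event_id in the browser and on the server from shared data."""
    return f"{event_name.lower()}:{transaction_id}"


def trace(seen):
    """For each ID in the source of truth, list the systems where it never arrived.

    `seen` maps a system name to the set of transaction_ids it recorded.
    """
    gaps = {}
    for transaction_id in sorted(seen.get(SYSTEMS[0], set())):
        missing = [s for s in SYSTEMS[1:] if transaction_id not in seen.get(s, set())]
        if missing:
            gaps[transaction_id] = missing
    return gaps


if __name__ == "__main__":
    seen = {
        "order_db": {"T1", "T2", "T3"},
        "ga4": {"T1", "T2"},
        "meta": {"T1", "T3"},
    }
    print(trace(seen))  # {'T2': ['meta'], 'T3': ['ga4']}
```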

Activity 3: Build Proactive Monitoring

Don't wait for stakeholders to notice tracking problems. Build systems that alert you first:

Timeline Event Count Reports: Create basic timeline reports showing event counts over time. When counts suddenly drop or spike, something's broken. These visual indicators catch problems that raw numbers might miss.

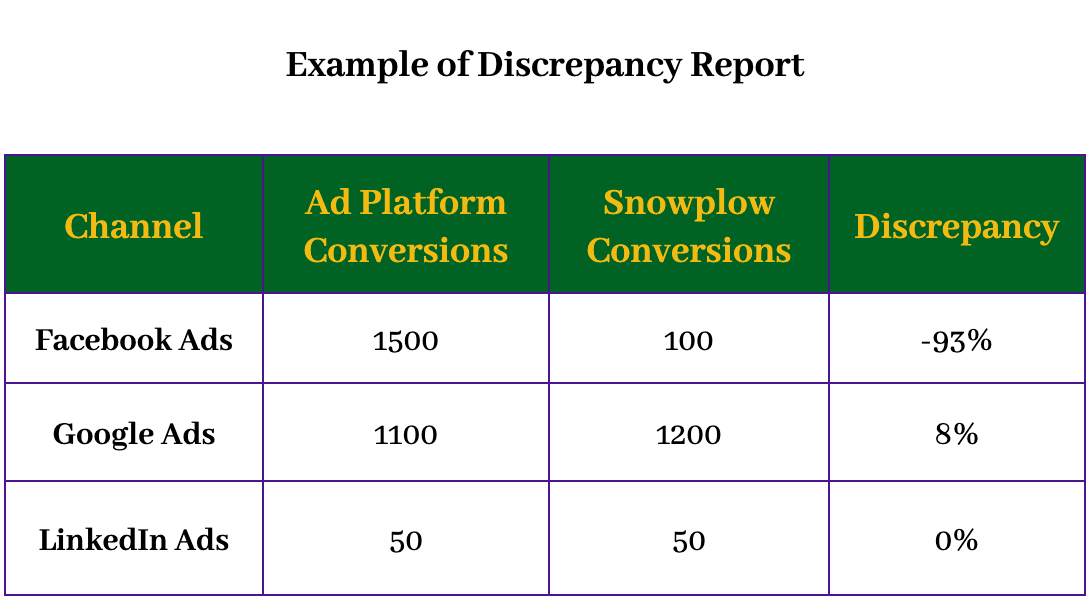

Discrepancy Alerts: Set up automated monitoring between systems. If GA4 and Meta normally differ by 15% but suddenly show a 40% gap, investigate immediately. These alerts become your early warning system.

Regression Tests: Work with engineering to turn a simple “When this happens → What should happen” sheet into automated test cases. These run with every release to confirm marketing events still fire correctly—preventing new features from silently breaking your tracking.
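The timeline and discrepancy checks can both start as a few lines of Python on a daily schedule. The sketch below assumes you already have daily event counts (for the timeline check) and daily totals from two systems (for the discrepancy check). The function names, the 7-day window, the 50% change threshold and the 10-point tolerance are assumptions to tune; the 15% usual gap comes from the example above.

```python
from statistics import mean


def count_anomalies(daily_counts, window=7, threshold=0.5):
    """Return (day_index, relative_change) for days far from the trailing mean."""
    alerts = []
    for i in range(window, len(daily_counts)):
        baseline = mean(daily_counts[i - window:i])
        if baseline == 0:
            continue  # nothing to compare against
        change = (daily_counts[i] - baseline) / baseline
        if abs(change) > threshold:
            alerts.append((i, round(change, 2)))
    return alerts


def discrepancy_alert(ga4_total, meta_total, usual_gap=0.15, tolerance=0.10):
    """Return (alert, gap); alert is True when the gap exceeds usual_gap + tolerance."""
    larger = max(ga4_total, meta_total)
    gap = abs(ga4_total - meta_total) / larger if larger else 0.0
    return gap > usual_gap + tolerance, round(gap, 2)


if __name__ == "__main__":
    purchases = [100, 98, 103, 101, 97, 99, 102, 40]  # last day drops sharply
    print(count_anomalies(purchases))    # [(7, -0.6)]
    print(discrepancy_alert(100, 85))    # (False, 0.15): the usual gap
    print(discrepancy_alert(100, 60))    # (True, 0.4): investigate
```

Because the trailing mean absorbs earlier anomalies, a multi-day outage will eventually stop alerting; a fixed baseline or a median is a reasonable alternative if that matters for you.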

The key insight here is that regular monitoring is far less expensive than emergency troubleshooting. A simple weekly review of key tracking metrics can prevent major crises.
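As a sketch of what one of the automated regression cases might check, assume your test harness can capture the dataLayer pushes (or server events) produced after an action in a staging environment. Each dictionary in `EXPECTATIONS` mirrors one row of a "When this happens → What should happen" sheet; the rows, event names and `check_expectation` function are hypothetical.

```python
# Each row mirrors one line of a "When this happens -> What should happen" sheet.
EXPECTATIONS = [
    {"when": "user completes checkout", "event": "purchase",
     "required": {"transaction_id", "value", "currency"}},
    {"when": "user submits demo form", "event": "generate_lead",
     "required": {"form_id"}},
]


def check_expectation(expectation, captured_events):
    """Return failure messages for one sheet row, given events captured after the action.

    captured_events is a list of dicts such as dataLayer pushes, e.g.
    {"event": "purchase", "transaction_id": "T1", ...}.
    """
    label, name = expectation["when"], expectation["event"]
    matches = [e for e in captured_events if e.get("event") == name]
    if not matches:
        return [f"{label}: '{name}' did not fire"]
    failures = []
    if len(matches) > 1:
        failures.append(f"{label}: '{name}' fired {len(matches)} times")
    missing = expectation["required"] - set(matches[0])
    if missing:
        failures.append(f"{label}: missing {sorted(missing)}")
    return failures
```

In a real suite you would likely run each row through your test framework (for example pytest's parametrization), so a release fails whenever `check_expectation` returns anything.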

Activity 4: Simplify to Reduce Failure Points

Tracking will break again. It's inevitable. Minimize the damage with these approaches:

Track only what's absolutely necessary. Every new event increases maintenance complexity. Fewer tracking points = fewer potential failures.

This relates directly to one of my core beliefs: most marketing problems are workflow issues, not tool problems. Complex tracking setups create process challenges that technical solutions alone can't solve.

Tracking Quality Determines Campaign Performance

Data isn't useful if people don't trust it, and broken tracking creates both trust and technology problems.

The true cost of broken tracking isn't just technical. It's that you're forced back into manual campaign management instead of leveraging the sophisticated algorithms that could be optimizing your spend.

Without reliable conversion signals, you lose access to the best technology ad platforms offer. Smart Bidding becomes unreliable. Lookalike audiences lose their effectiveness. Campaign Budget Optimization can't function properly. You're essentially paying premium prices for advanced platforms while using them like basic display networks from 2010.

Get this right, and everything else becomes possible. Get it wrong, and you're not just guessing expensively. You're abandoning the algorithmic advantages that separate winning campaigns from wasteful ones.

Do you need help fixing your tracking?

I’ve recently launched FixMyTracking, a conversion tracking auditing service. It’s as simple as it sounds: I investigate the events from your paid campaigns and prepare clear fixing instructions and recommendations for you to share with your engineering team.