Are You Still Running Paid Media Like It's 2015?

If you’re over-segmenting campaigns and underestimating the role of conversion events, get comfortable and read this article.

Most marketing teams have upgraded their tools but not their playbook. Platforms now decide who to show your ads to, where, and when. And yet most campaign setups still look like it’s 2015. This piece breaks down what’s changed, why your conversion signals matter more than ever, and how to rebuild your campaigns for 2025 performance. This article is based on a talk I gave, and you can download the presentation here.

First, let’s go back to 2015

In 2015, I was leading growth at HER, a dating app where we scaled from 2,000 to 1 million users. Every morning started the same way: open the campaigns, check the CPIs, adjust bids on the ad groups segmented by audience, pause the underperforming ads, and boost budget on the campaigns that were crushing it.

We had persona decks that could wallpaper an office. We knew exactly which Facebook pages our users liked, what time of day converted best, which creatives would have the highest CTR. We split-tested everything, manually tweaked bids at least twice daily, and felt genuinely in control of our campaigns.

That was 2015. Platforms have changed completely since then, but most marketing teams haven’t.

We’ve moved from controlling campaigns to feeding algorithms. Yet we’re still setting them up like it’s 2015.

How paid media has evolved

The transformation happened gradually, then suddenly. The trend was already under way before AI, but it has now moved into fast mode.

What we controlled in 2015:

Built audiences from scratch using granular targeting

Set manual bids down to the penny

Chose exactly which placements our ads would appear on

Made every targeting decision ourselves

Created detailed persona decks

What platforms control in 2025:

Performance Max decides where your Google ads appear across Search, Display, YouTube, Gmail, and Discovery

Meta Advantage+ combines your creative assets and serves them to whoever the algorithm thinks will convert. It can now even generate assets for you.

Broad match with tROAS lets the platform find customers you never would have targeted manually

Auto-bidding optimizes spend in real-time across millions of signals

The algorithm is now the media buyer. Our job is to teach it what good looks like.

This isn’t a minor shift in tactics. It’s a fundamental restructuring of how paid media works. Platforms don’t want us micromanaging campaigns anymore because their algorithms genuinely perform better when given consolidated data and clear objectives.

But here’s the problem: most teams adapted their campaign types without adapting their approach. They’re running Performance Max campaigns but still thinking like it’s 2015.

There are habits we haven’t yet outgrown

Walk into most marketing teams today and you’ll see the same patterns from a decade ago, just wrapped in newer interfaces.

Over-segmentation remains the biggest culprit

Teams split campaigns into eight ad sets because they want to “test different audiences,” when the platform already has access to billions of signals that make those manual segments obsolete. They’re limiting the algorithm’s ability to learn by spreading conversions too thin across too many containers.


Over-segmentation also distorts budget allocation. Instead of letting the algorithm optimize for ROAS and shift budget across campaigns based on performance, marketers manually set a budget for each audience. This leads to inefficiency, because money stays locked in segments whether or not they are performing.

Siloed testing produces misleading results

Teams test a new creative in a separate campaign, see it perform worse than established ads (because it’s in the learning phase with no historical data), and conclude the creative is bad. They’re drawing conclusions from a fundamentally flawed test setup. The creative might be brilliant, but they’ve handicapped it by isolating it from the campaign’s accumulated learning.

Nowadays, creative is the targeting, and there are better methodologies for understanding creative performance than isolated tests.

Attribution obsession consumes countless hours

Teams try to make their Facebook Ads Manager numbers match GA4, then panic when they don’t align. They adjust attribution windows, create custom reports, schedule meetings to discuss the discrepancy.

Meanwhile, they’re missing the fundamental point: your ad platform doesn’t care about GA4. It optimizes based on the conversion signals you send it, not what appears in your analytics dashboard. In fact, I’d go as far as saying: if your Meta and GA4 conversions are matching, then you’re not doing Meta right.

Uniform targets ignore customer value

Teams set a $50 CAC target across all campaigns, treating every customer as equally valuable. But a $500-per-year subscriber and a $5 trial user are not the same. Without value data, the algorithm can’t tell them apart and will happily spend your budget acquiring the cheapest conversions, not the most valuable ones.

Most teams are aware of this problem. But they try to fix it with reporting, by measuring which campaigns or strategies lead to the highest ROAS. The better approach is to feed this value data into the ad platform itself.
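To make the CAC problem concrete, here is a small arithmetic sketch with hypothetical numbers (the campaign names and figures are illustrative assumptions, not client data). Both campaigns hit the same $50 CAC, yet one returns a fraction of its spend and the other returns ten times it.

```python
# Hypothetical campaigns: same spend and same customer count, so identical CAC.
campaigns = {
    "campaign_a": {"spend": 5000.0, "customers": 100, "value_per_customer": 5.0},    # $5 trial users
    "campaign_b": {"spend": 5000.0, "customers": 100, "value_per_customer": 500.0},  # $500/year subscribers
}

def cac(c: dict) -> float:
    """Customer acquisition cost: spend divided by customers acquired."""
    return c["spend"] / c["customers"]

def roas(c: dict) -> float:
    """Return on ad spend: total customer value divided by spend."""
    return c["customers"] * c["value_per_customer"] / c["spend"]

for name, c in campaigns.items():
    print(f"{name}: CAC=${cac(c):.2f}, ROAS={roas(c):.2f}")
# campaign_a: CAC=$50.00, ROAS=0.10
# campaign_b: CAC=$50.00, ROAS=10.00
```

A CAC-only target scores these two campaigns as equal; only the value field separates them.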

We say we trust the algorithm, but we don’t trust it to learn.

What actually matters now: your conversion signals

Here’s what most marketers get wrong about conversion tracking: they think if their tag fires, they’re good. They check the Facebook Pixel Helper, see the green checkmark, and move on.

But a working tag doesn’t mean you have useful signals. The algorithm doesn’t see your dashboard. It sees your payload.
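A minimal sketch of what "checking the payload" could mean in practice, assuming events are plain dicts shaped loosely like Meta's Conversions API server events (`event_name`, `event_id`, `user_data`, `custom_data`). The list of checks is an illustrative assumption; each platform documents its own required and recommended fields.

```python
def audit_event(event: dict) -> list[str]:
    """Return human-readable problems found in a single conversion event."""
    problems = []
    if not event.get("event_id"):
        problems.append("missing event_id: browser and server copies can't be deduplicated")
    user_data = event.get("user_data", {})
    if not user_data.get("em"):
        problems.append("missing hashed email: weaker matching back to ad clicks")
    custom_data = event.get("custom_data", {})
    if "value" not in custom_data:
        problems.append("missing value: the platform can't tell customers apart")
    if "currency" not in custom_data:
        problems.append("missing currency")
    return problems

# A tag that "fires" but carries almost nothing the algorithm can learn from.
bare_event = {"event_name": "Purchase"}
for problem in audit_event(bare_event):
    print("-", problem)
```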

The four inputs the algorithm actually needs

Platforms need four things to optimize effectively, and most campaigns are missing at least two of them.

1. Sufficient Frequency

Meta’s learning phase requires about 50 conversions per week per ad set, and Google’s algorithms need similar volumes. Fire 10 conversions per week and your campaign never exits the learning phase; it just perpetually resets, unable to establish patterns or optimize meaningfully.

This is why consolidating campaigns almost always improves performance. You’re not giving the platform less control; you’re giving it more data to learn from.
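The consolidation argument is simple arithmetic. This sketch assumes a hypothetical account with 80 weekly conversions and treats the roughly 50-per-week figure above as a hard threshold, which is a simplification of how platforms actually evaluate the learning phase.

```python
LEARNING_THRESHOLD = 50  # approx. weekly conversions per ad set, per the figure in the text

def exits_learning(weekly_conversions: int, threshold: int = LEARNING_THRESHOLD) -> bool:
    """Simplified check: does this ad set get enough weekly signal to learn?"""
    return weekly_conversions >= threshold

# Hypothetical account: 80 conversions a week.
total_weekly = 80
segmented = [total_weekly // 8] * 8  # eight audience ad sets, 10 conversions each
consolidated = [total_weekly]        # one broad ad set with all 80

print("segmented:", [exits_learning(n) for n in segmented])
print("consolidated:", [exits_learning(n) for n in consolidated])
```

Same total conversions, but only the consolidated setup gives any single ad set enough data to learn from.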

2. Signal Consistency

If you’re sending the same conversion from both client-side pixels and server-side events without proper deduplication, the platform sees twice as many conversions as actually occurred. Your reported CAC looks fantastic, but you’re teaching the algorithm to optimize toward inflated numbers.

When you eventually fix the duplication, performance appears to crater, but really, you were just measuring wrong the entire time.
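Deduplication works by giving the browser and server copies of a conversion the same identifier. Meta, for example, documents matching on the event name plus an event ID shared between the pixel and the Conversions API. The sketch below only mimics that matching key locally on hypothetical events; it is an illustration, not the platform's implementation.

```python
def deduplicate(events: list[dict]) -> list[dict]:
    """Keep the first event seen for each (event_name, event_id) pair."""
    seen = set()
    unique = []
    for event in events:
        key = (event["event_name"], event["event_id"])
        if key in seen:
            continue  # same conversion already reported by the other source
        seen.add(key)
        unique.append(event)
    return unique

# Hypothetical: two orders, each reported by both the browser pixel and the server.
events = [
    {"event_name": "Purchase", "event_id": "order-1001", "source": "browser"},
    {"event_name": "Purchase", "event_id": "order-1001", "source": "server"},
    {"event_name": "Purchase", "event_id": "order-1002", "source": "server"},
    {"event_name": "Purchase", "event_id": "order-1002", "source": "browser"},
]
print(len(events), "->", len(deduplicate(events)))  # 4 -> 2
```

Without a shared `event_id`, there is no reliable key, and the platform counts four purchases instead of two.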

3. Attribution Compatibility

Modern attribution requires multiple data sources:

Client-side tracking catches some conversions

Server-side catches others

Proper PII (like email addresses) helps platforms match conversions back to ad clicks even when cookies fail

Teams running only client-side tracking could miss 80%+ of their conversions in a post-iOS14 world. The platform thinks those campaigns perform worse than they do and shifts budget away from them.
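Platforms such as Meta and Google generally expect email addresses to be normalized and SHA-256 hashed before you send them. A minimal sketch, assuming only two normalization rules (trim whitespace, lowercase); each platform documents further rules for emails and other identifiers, so check their docs before relying on this.

```python
import hashlib

def normalize_and_hash_email(email: str) -> str:
    """Trim whitespace, lowercase, then return the SHA-256 hex digest."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

a = normalize_and_hash_email("  Jane.Doe@Example.com ")
b = normalize_and_hash_email("jane.doe@example.com")
print(a == b)  # True: same customer, same hash
```

Consistent normalization matters because the platform can only match your hash against its own if both sides hashed exactly the same string.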

4. Value Data

This is the most commonly missing piece. A $500 annual subscriber and a $5 monthly trialist should not look identical to Meta’s algorithm, but without value and currency fields in your conversion payload, they do.

The platform will optimize for volume rather than value, delivering you a steady stream of low-quality, cheap conversions while the valuable customers remain out of reach.
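Here is a sketch of a server-side purchase event that carries value and currency. The field names follow the general shape of Meta's Conversions API events (`event_name`, `event_time`, `event_id`, `action_source`, `user_data`, `custom_data`) as I understand it; the order IDs and the hash placeholder are hypothetical, and the code only builds the payload without sending anything.

```python
import json
import time

def build_purchase_event(order_id: str, hashed_email: str, value: float, currency: str) -> dict:
    """Assemble one server-side Purchase event that carries value and currency."""
    return {
        "event_name": "Purchase",
        "event_time": int(time.time()),  # Unix timestamp in seconds
        "event_id": order_id,            # reuse the ID the browser pixel sends, for deduplication
        "action_source": "website",
        "user_data": {"em": [hashed_email]},
        "custom_data": {"value": value, "currency": currency},
    }

# Hypothetical: an annual subscriber and a trialist are no longer identical to the platform.
annual = build_purchase_event("order-2001", "<sha256-of-email>", 500.00, "USD")
trial = build_purchase_event("order-2002", "<sha256-of-email>", 5.00, "USD")
print(json.dumps({"data": [annual, trial]}, indent=2))
```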

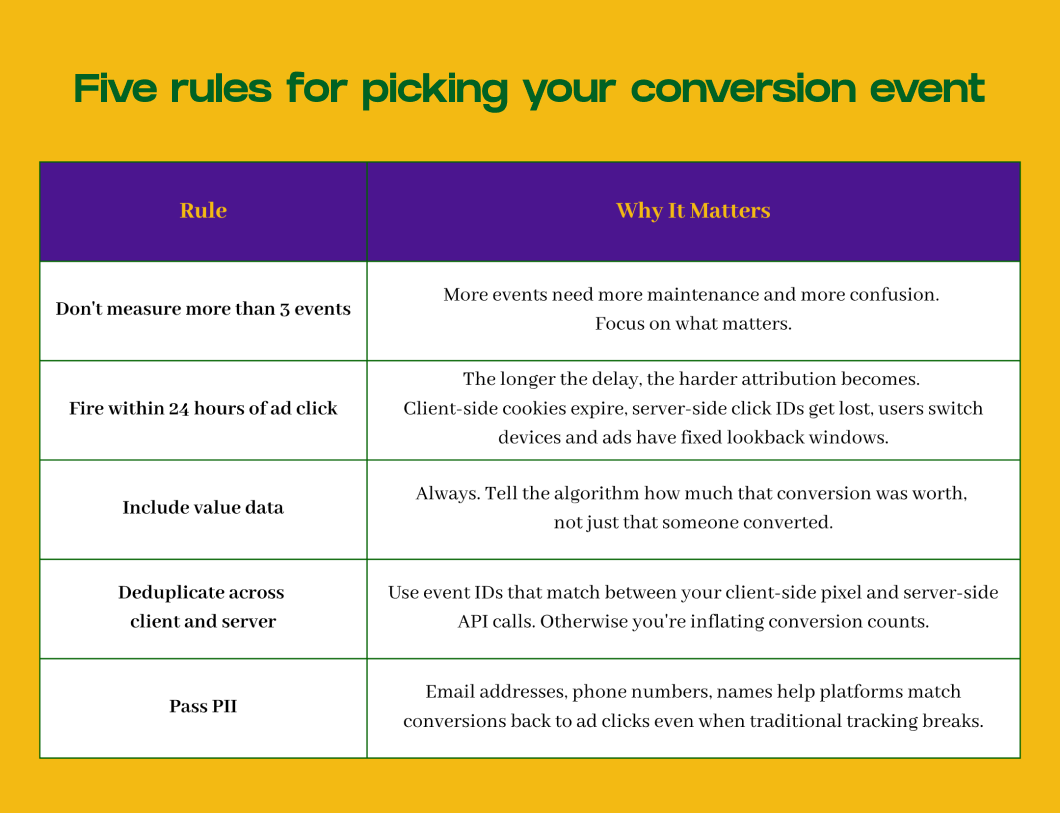

Barbara’s Five Rules for Optimization Events

Through eight years of fixing tracking for companies like Microsoft, WeTransfer, and dozens of startups, I’ve developed five rules that consistently separate high-performing campaigns from struggling ones.

The business impact of better signals

These technical details translate directly to business outcomes I’ve seen repeatedly across client engagements:

Performance marketers become strategists again. When your conversion signals work properly, the nature of the job changes. You stop spending hours tweaking bids, debating audience exclusions, or reconciling attribution reports. That time gets redirected toward work that actually differentiates your brand: developing positioning that resonates, creating thumb-stopping creative, testing new value propositions. The algorithm handles mechanical optimization across millions of micro-moments. You focus on strategic decisions it can’t make: what story to tell, which pain points to address, how to position against competitors.

Faster learning phases mean campaigns become profitable sooner. Instead of spending three weeks stuck in learning while burning budget, campaigns with strong signals exit in days. They start optimizing toward real conversions faster, which means less wasted spend during the ramp-up period.

Reduced troubleshooting time might sound minor, but I’ve seen teams spend 15 hours per week debugging why campaigns aren’t performing, only to discover their conversion events were firing inconsistently or missing value data. Fix the signals, and those hours get redirected toward creative strategy and landing page optimization, work that actually moves the needle.

Smarter budget allocation happens automatically when platforms have good data. Instead of spreading budget evenly across campaigns because you don’t trust the platform’s optimization, you can consolidate into fewer campaigns and let the algorithm shift spend toward whatever’s working. I’ve seen this change alone improve efficiency by 30-40% for clients who were previously over-segmented.

Most teams think they need better targeting. They actually need better signals.

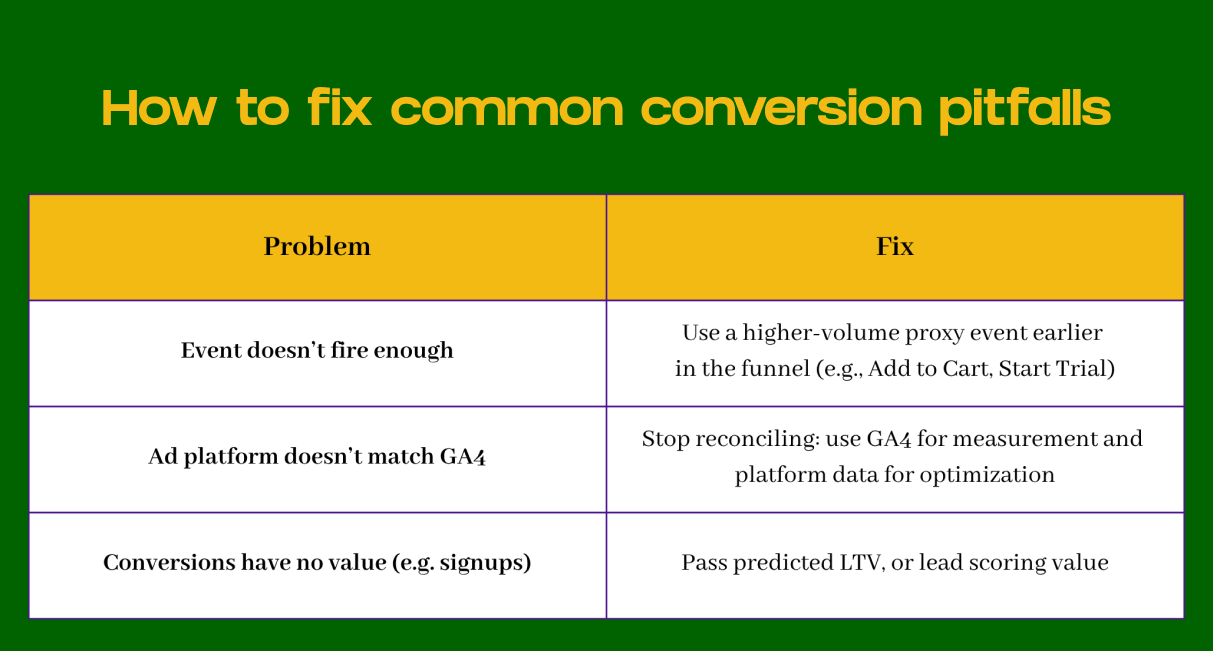

Common conversion pitfalls (and fixes)

Even with the best intentions, conversion signals often go wrong. Here are the issues I encounter most frequently and their practical solutions.

What if my event doesn’t fire enough?

The problem: Your primary conversion event happens too rarely (say, 20 times per week), so your campaign never exits the learning phase.

The fix: Use a higher-volume proxy event that occurs earlier in the funnel. For subscription businesses, optimize for “Start Trial” instead of “Subscribe.” For e-commerce with high-consideration products, optimize for “Add to Cart” instead of “Purchase.”

Yes, you’re technically optimizing for a different event, but you’re giving the algorithm enough data to learn patterns. Just make sure you include predicted value data so it knows some add-to-carts are more valuable than others.
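One way to apply the proxy-event fix is to pick the deepest funnel event that still clears the weekly volume bar, then attach a predicted value to it. A minimal sketch with a hypothetical funnel, a threshold of 50 taken from the figure discussed earlier, and an assumed trial-to-paid rate; the function name is illustrative.

```python
def pick_optimization_event(funnel: list[tuple[str, int]], threshold: int = 50) -> str:
    """Return the deepest funnel event whose weekly count clears the threshold.

    `funnel` is ordered from top (most frequent) to bottom (most valuable).
    Falls back to the top-of-funnel event if nothing clears the bar.
    """
    chosen = funnel[0][0]
    for name, weekly_count in funnel:
        if weekly_count >= threshold:
            chosen = name
    return chosen

# Hypothetical subscription funnel with weekly event counts.
funnel = [("CompleteRegistration", 400), ("StartTrial", 120), ("Subscribe", 20)]
print(pick_optimization_event(funnel))  # StartTrial

# Predicted value for the proxy event: assumed trial-to-paid rate x plan price.
trial_to_paid_rate = 0.25
annual_plan_price = 500.0
predicted_trial_value = trial_to_paid_rate * annual_plan_price  # 125.0
```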

What if it doesn’t match GA4?

The problem: Your ad platform’s conversion data doesn’t align with GA4 and you’re spending hours trying to reconcile them.

The fix: Stop trying to make them match. Discrepancies are expected, because the two tools measure different things through different methodologies.

Optimize for algorithm learning, not report matching. Use GA4 for understanding user journeys and website performance. Use platform conversion data for campaign optimization.
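A toy example shows why the numbers diverge even when both tools work correctly. It uses two simplified rules chosen for illustration: a Meta-style window (click within 7 days or view within 1 day, Meta's usual default setting) and a plain last-click rule standing in for an analytics tool's attribution model. The customer journey is hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical journey for one purchase: a Meta ad view, then a Google search click.
purchase_time = datetime(2025, 3, 10, 12, 0)
touchpoints = [
    {"channel": "meta", "type": "view", "time": purchase_time - timedelta(hours=20)},
    {"channel": "google", "type": "click", "time": purchase_time - timedelta(hours=1)},
]

def meta_claims(touches, purchase, click_window=timedelta(days=7), view_window=timedelta(days=1)):
    """Simplified Meta-style rule: any Meta click within 7 days or view within 1 day."""
    for t in touches:
        if t["channel"] != "meta":
            continue
        window = click_window if t["type"] == "click" else view_window
        if purchase - t["time"] <= window:
            return True
    return False

def last_click_channel(touches):
    """Simplified last-click rule: credit the most recent click, ignore views."""
    clicks = [t for t in touches if t["type"] == "click"]
    return max(clicks, key=lambda t: t["time"])["channel"] if clicks else "direct"

print("Meta counts it:", meta_claims(touchpoints, purchase_time))  # True
print("Last-click credits:", last_click_channel(touchpoints))      # google
```

Both answers are "right" under their own rules, which is exactly why reconciling them is wasted effort.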

What if it doesn’t carry value?

The problem: You’re sending conversions without any value data attached.

The fix: Always aim to send revenue or value data with your conversions:

E-commerce: Send the transaction value

Subscription products: Send the predicted LTV based on which plan they chose

Lead gen: Assign values to different lead types based on your historical close rates and deal sizes

Mobile apps: Model predicted LTV based on early user behavior, even if it’s not perfectly accurate

An imperfect value signal is far better than no value signal at all.
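For the lead-gen case, a common way to assign values is expected revenue per lead: historical close rate multiplied by average deal size. A minimal sketch with hypothetical lead types and numbers; substitute your own CRM history.

```python
# Hypothetical lead types with historical close rates and average deal sizes.
lead_types = {
    "demo_request": {"close_rate": 0.20, "avg_deal": 10_000.0},
    "pricing_download": {"close_rate": 0.05, "avg_deal": 8_000.0},
    "newsletter_signup": {"close_rate": 0.01, "avg_deal": 5_000.0},
}

def expected_lead_value(close_rate: float, avg_deal: float) -> float:
    """Expected revenue per lead = probability of closing x typical deal size."""
    return close_rate * avg_deal

lead_values = {name: expected_lead_value(**v) for name, v in lead_types.items()}
for name, value in lead_values.items():
    print(f"{name}: send value={value:.2f}")
```

These numbers will be imprecise, but they still tell the algorithm that one demo request is worth far more than one newsletter signup.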

The mindset shift: optimization ≠ measurement

Many teams conflate optimization with measurement, and it causes endless confusion. They’re trying to use the same data for two different purposes, then getting frustrated when it doesn’t work perfectly for both.

For Optimization: Use Platform Conversions

Facebook Ads Manager, Google Ads, TikTok Ads Manager should receive:

Clean, high-frequency conversion events

Value data attached

Fast firing (events sent within 24 hours of the conversion)

Comprehensive coverage (don’t worry if numbers don’t match your source of truth)

Their job is to teach algorithms who to show your ads to.

For Measurement: Use Incrementality Tests

Holdout tests, geo experiments, conversion lift studies, marketing mix modeling should:

Tell you whether your ads actually caused incremental business results

Answer the question: is this advertising working?

Guide strategic decisions about overall budget allocation

These are different questions requiring different tools. Your optimization data needs to be fast and comprehensive so algorithms can learn quickly. Your measurement data needs to be accurate and causal so you can make strategic decisions.
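The core arithmetic of a holdout test is small. A minimal sketch with hypothetical group sizes and conversion counts: compare the conversion rate of users eligible to see ads against a held-out control group, and scale the difference to estimate how many conversions the ads actually caused.

```python
def incremental_lift(test_conversions: int, test_size: int,
                     control_conversions: int, control_size: int) -> tuple[float, float]:
    """Relative lift of the exposed group over the holdout, plus incremental conversions."""
    test_rate = test_conversions / test_size
    control_rate = control_conversions / control_size
    lift = (test_rate - control_rate) / control_rate
    incremental = (test_rate - control_rate) * test_size  # conversions attributable to the ads
    return lift, incremental

# Hypothetical holdout: 90% of users eligible for ads, 10% held out.
lift, incremental = incremental_lift(test_conversions=2_700, test_size=90_000,
                                     control_conversions=200, control_size=10_000)
print(f"lift={lift:.0%}, incremental conversions={incremental:.0f}")
```

A real test also needs a significance check and enough volume in the holdout; this only shows the calculation.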

Stop trying to make everything match. Start making everything learn.

Don’t underestimate the role of data in campaign optimization

If your campaigns still look like they did in 2015, it’s not your creative, it’s your signals.

The platforms have evolved dramatically. The algorithms are genuinely sophisticated now, capable of finding customers and optimizing bids better than we ever could manually. But they’re only as good as the data we feed them.

Most teams are still fighting the algorithm instead of feeding it. They’re over-segmenting campaigns, tracking too many events, missing value data, and wondering why performance has plateaued.

The solution isn’t more manual control. It’s better conversion signals.

I launched FixMyTracking this year specifically to help advertisers fix their ad platform conversion tracking. After eight years of freelancing in marketing data for companies like Microsoft, Veed.io, WeTransfer, and Superbet, I kept seeing the same issues repeatedly: smart teams with sophisticated strategies, held back by broken conversion signals they didn’t even know were broken.
